Instagram has introduced several new safety features aimed at protecting teenagers on its platform. The changes give parents more oversight and control over their children’s activity, particularly for users under 16, as Meta attempts to address growing concerns about online safety for younger users.

What Are Instagram’s New Teen Features?

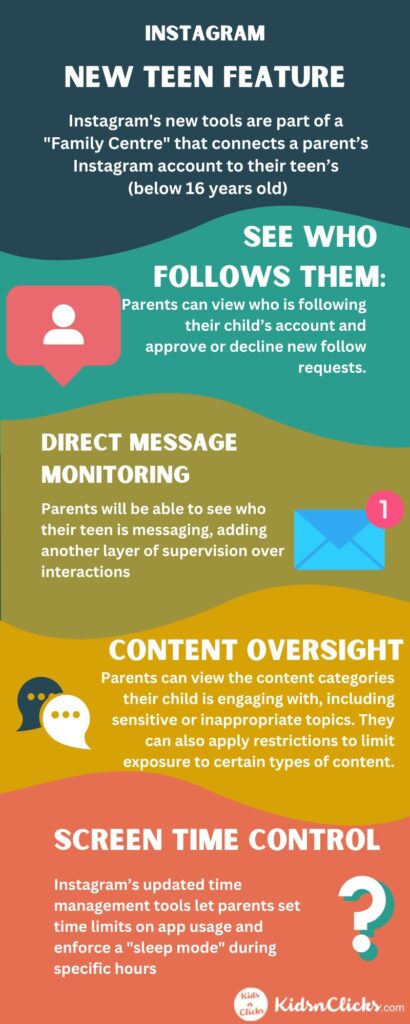

Instagram has rolled out a feature called the “Family Centre,” which allows parents to link their accounts to their child’s Instagram profile. Here are some key features:

1. Privacy Settings

- All teen accounts are set to private by default.

- Users under 16 need parental consent to change privacy settings.

2. Messaging Restrictions

- Teens can only message people they follow or are connected to.

- Parents can view who their child has messaged in the past week.

3. Content Limitations

- Stricter content settings are applied, limiting exposure to sensitive material.

- Teens can choose age-appropriate topics they want to see more of.

4. Time Management

- A “Sleep Mode” mutes notifications between 10 PM and 7 AM.

- Parents can set daily time limits for app usage and can even block access to Instagram once the limit is reached.

- Teens will get notifications telling them to leave the app after 60 minutes of use each day.

5. Parental Controls

- Enhanced tools allow parents to monitor their teen’s activity.

- Parents can see the topics their teen engages with most frequently.

- Parents can connect their account to their teen’s Instagram account.

- Teens under 16 will need parental permission to adjust their settings. Teens aged 16-17 will have these features enabled by default (which is great), but they can turn them off without needing parental approval.

Meta says that teens under 16 need to have their parents set up supervision on Instagram to change certain settings. For example, if a 15-year-old wants to change their privacy settings, their parent has to approve it first.

Teens aged 16 and older can invite their parents to supervise their accounts if the parents want more control. Once supervision is set up, parents can approve or deny requests, for example when a 17-year-old wants to turn off a safety feature. Soon, parents will also be able to change settings directly to keep things safer.

These tools aim to give parents more control over their children’s social media usage while keeping teens safer in a digital environment that has been difficult to police.

Criticism of the New Features

Despite the updates, many parents, experts, and child safety advocates have raised concerns about whether these changes go far enough to protect teens effectively.

1. Age Verification Issues

Meta says it is taking steps to stop teens from lying about their age to avoid the safety features. For example, if a teen tries to create an account with a fake adult birthday, Meta will ask them to prove their real age, which could mean showing an ID or taking a video selfie. Meta is also building new tools to find teens who may have lied about their age and automatically place them in safer settings.

In effect, Meta treats anyone creating a new account as a child unless they prove otherwise with an ID or video selfie. This is a good step.

Some kids might try to get around this by setting their age to 16, since teens aged 16 and 17 can turn off the safety features without parental approval.

Meta is also using AI to find accounts that might be run by kids under 16. If the system suspects someone has lied about their age, they will have to verify it through the video selfie process.

2. Burden on Parents

Critics argue that Instagram is shifting too much responsibility onto parents. While the new features give parents more oversight, they also require parents to be actively engaged on the platform and understand the various controls—something many may not have the time or knowledge to do.

As Meta’s president of global affairs, Sir Nick Clegg, recently admitted, many parents simply don’t use these tools, leaving children without the level of supervision intended by these updates.

3. Inadequate Protection from Harmful Content

While Instagram’s new controls limit sensitive content for teens, harmful material can still slip through algorithms designed to maximize engagement, and younger users are especially exposed. It remains to be seen whether the new settings will actually solve this problem.

4. Teens’ Resistance to Oversight

Many teenagers are likely to resist these changes. The “cringe factor” of being monitored by parents or having a “teen account” could drive them to find ways around the restrictions, such as creating adult accounts.

Teenagers often want to be treated like adults, and these safety measures may leave them feeling singled out, making them more likely to look for riskier ways to access the platform.

Meta’s Targeting of Teenagers: A PR Move?

A recent Financial Times article highlights Meta’s push to target teenagers more aggressively as part of its broader growth strategy.

With platforms like TikTok capturing younger audiences, Meta has been pushing features and content aimed at teens in an effort to maintain its market share.

This raises the question: are these new safety features for teens more about protecting kids or protecting Meta’s business?

Meta’s efforts to introduce more parental controls come at a time when the company is facing increased scrutiny from governments worldwide.

In the U.S., the proposed Kids Online Safety Act (KOSA) aims to hold social media companies accountable for failing to protect young users.

The timing of Instagram’s updates, just before key legislative votes, has led some to wonder whether these changes are more about avoiding penalties under future regulations than truly keeping teens safe.

What Does This Mean for Parents?

While Instagram’s new features offer some additional protections, they come with significant challenges. Here’s what parents need to keep in mind:

- Parental Involvement Is Crucial: Instagram’s tools are only effective if parents actively use them. It’s essential to stay involved in your child’s digital life, but this also means learning how to navigate Instagram’s complex safety settings.

- Delayed Social Media Access: If possible, delay giving your teen access to social media until they are mature enough to handle it. This gives them time to develop critical thinking skills that can help them navigate online risks.

- Open Conversations: Regularly talk to your teen about the dangers of social media, including cyberbullying, online predators, and the manipulative nature of algorithms. Open communication can help them better understand the need for safety measures.

- Teach Media Literacy: Help your teen develop the ability to assess the content they encounter online. Explain how social media platforms work, particularly how algorithms are designed to keep users engaged, often by pushing sensational or harmful content.

- Consider Third-Party Tools: There are additional monitoring apps that offer more control over your child’s online experience, such as content filters or geofencing. These tools can supplement Instagram’s built-in controls.

Conclusion: Is This Just PR?

While Instagram’s new features do offer some extra protection, it’s hard not to wonder whether this is more about improving Meta’s image than actually keeping teens safe.

With Meta trying to keep young users engaged to stay competitive, it’s important for parents to understand that these changes might be more about avoiding regulatory penalties than truly creating a safe space for kids online.

As a parent, it’s crucial to stay involved and make use of the available tools, but it’s also important to know the limitations of these features.

Social media companies need to step up by improving age verification, tweaking their algorithms, and taking more responsibility for harmful content if they genuinely want to keep young users safe.
