It’s easy for tech platforms to turn a blind eye and enjoy the benefits of young users, but what are the implications for kids and parents?

Recently, Mark Zuckerberg published an op-ed in the Washington Post calling for more regulation of the technology industry.

He spoke directly to four areas that have landed Facebook in hot water before:

- Harmful content,

- Election integrity,

- Privacy and

- Data portability.

Admittedly, the embattled CEO has lost credibility in certain tech circles, leading one early Facebook investor to coin the term “Zucked” to describe the systemic problems with the platform.

As a result, Zuckerberg’s call for regulation was mostly met with speculation about his motives.

But if we’re focusing on whether Zuckerberg’s call for regulation is authentic, self-serving or reactive — we’re missing a much more important point: the conversation about regulation needs to place more emphasis on young people.

The potential impact of technology on developing minds and unprepared users (kids under 13) is massive, and deserves more focus from the tech community at large.


Though platforms must comply with the Children’s Online Privacy Protection Act (COPPA), which is enforced by the Federal Trade Commission, most can still operate in a grey area by simply “ignoring” the fact that children are online.

In reality, many still use a number of different strategies to market directly to this segment, and they’ve seen significant user growth as a result.

So what can be done to better protect our kids?

Understanding COPPA:

How COPPA Works

Since April 2000, COPPA has set out guidelines to protect children’s data.

Companies that violate these regulations face fines of $16,000 USD per affected child.

For platforms where parents create accounts on behalf of children, COPPA has strict requirements to ensure that the person signing up is, in fact, an adult.

Without getting lost in the details, suffice it to say that the COPPA regulations are strict and have a significant impact on how platforms are designed and how they interact with users. Similar restrictions exist under Europe’s General Data Protection Regulation–Kids (GDPR-K).

These rules are no joke. They make it difficult for us to develop platforms for kids — and that’s a good thing.

When kids are involved, we should be erring on the side of safety. But when large platforms are more interested in daily active user counts than child safety, it’s all too easy for them to circumvent COPPA and GDPR-K altogether.


The COPPA Conundrum

Common Sense Media reports that the average kid gets their first social media account at age 12.6, while Influence Central’s estimate is even younger: age 11.4.

Either way, children are signing up before they are technically allowed on the platforms, usually around the same time they get their first cell phone.

Large platforms face a critical choice. When they acknowledge that kids under the age of 13 are on their platform, they need to put processes in place to comply with COPPA.

But if they claim they don’t cater to young users, they can more or less ignore it.

That’s fine in theory, but I think we all know that kids are using these platforms.

Facebook, YouTube, Snapchat and TikTok all derive their value from their user counts.

So implementing COPPA-compliant flows would not only be extremely costly, but would also hurt their most important metric by cutting out a massive segment of users.


The Social Media Problem:

Parents Love YouTube Kids… Kids Love YouTube

These platforms would never admit that they are marketing to children, but Snapchat and TikTok sure have a lot of fun features, filters and music that are attractive to young users.

If you’re a parent, you already know that children have little or no interest in YouTube Kids, especially after they’ve been exposed to the adult version.

According to one survey, YouTube Kids ranks #43 among children’s favourite brands, while YouTube is #1. It is the number one platform for kids under 13.

And while YouTube Kids’ filters aren’t foolproof, there is definitely more accountability there than on YouTube, which doesn’t have to abide by COPPA.

According to the platform’s Terms of Use, anyone under the age of 13 will be removed. Despite this official stance, YouTube’s top-earning channel in 2018 starred a seven-year-old “kid influencer” named Ryan.


The problematic trend of kid influencers is a topic for another post entirely, but it’s important to note here that YouTube’s top channel stars a kid who should be excluded by the platform’s own Terms of Use.

As with most things, the devil is in the details: there’s nothing illegal about a channel owned by a minor so long as the parents have agreed to share their own personal information.

This loophole makes it technically “okay” for the content to target kids. A quick scan of the top US channels shows there are plenty clearly aimed at kids, and at the end of the day, platforms have very little incentive to stop targeting underage users.

After all, addressing the hypocrisy would be bad for their metrics.

The Lack of Regulation That Affects Children:

Much like the effort to stop tobacco companies from targeting kids, this problem needs to be regulated externally.

The Ultimate Cost of Loopholes

Because of their Terms of Use, social media, gaming and content sites can operate outside of COPPA, with a much lower threshold for parental controls, monitoring and reporting.

They exist in a grey area where they still get to capitalize on the underage market, all the while benefiting from better metrics, higher valuations and increased ad dollars.

Meanwhile, children are able to connect with strangers, share personal information, and become targets for advertising algorithms.

The underage audience is exposed to adult features like comment boards (which can be pretty toxic places) and thrust prematurely into a world of comparison, validation and in-app tracking.

Major platforms shouldn’t be exempt from responsibility because they skillfully write their Terms of Use.


The Safe App for Kids:

COPPA is in place to protect young users, and I am happy that Kinzoo is held to that standard in the US. We’re also working towards GDPR-K compliance in the near future.

But we see this as table stakes — and we are innovating in safety to make our platforms even better.

Adhering to regulations ultimately makes it harder for us to compete as a tech company, but we believe that we need to shift the conversation around what success looks like for kids’ platforms.

As a parent, first and foremost, I want my kids to be safe when they use technology.

What Can Be Done?

Too many things in tech are opaque, and it’s not fair to place the onus of protection squarely on parents.

I’ve been encouraged recently to see advertisers intervene when platforms won’t. YouTube recently disabled comments on channels that feature children after predatory comments began appearing, but it only took action after major advertisers, including Disney, Epic Games and Nestle, threatened to walk.

Platforms can also be subject to penalties for violations.

Don’t forget to get this free copy

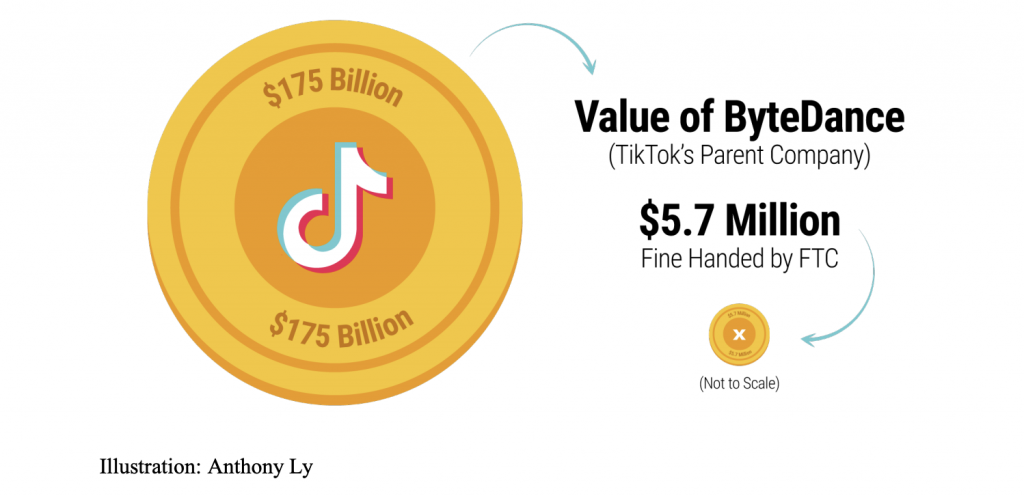

Recently, TikTok was hit with a record $5.7 million fine from the FTC for collecting personal information from children under the age of 13.

Though it seems like a large sum, the amount is a drop in the bucket for TikTok’s parent company, ByteDance, which is worth an estimated $175 billion.

When a fine is only 0.003% of the company’s value, it’s hardly enough to be a real deterrent.
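For readers who want to check that percentage, here is the arithmetic using only the two figures quoted above (the $5.7 million fine and ByteDance’s estimated $175 billion valuation). It is a rough ratio of fine to company value, not a measure of revenue or profit impact:

$$
\frac{\$5.7\ \text{million}}{\$175\ \text{billion}} = \frac{5.7 \times 10^{6}}{1.75 \times 10^{11}} \approx 3.3 \times 10^{-5} \approx 0.003\%
$$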

In fact, Google has paid more in fines than in taxes in Europe, and the company just looks at them as the cost of doing business.

We live in a world where not even Mark Zuckerberg can deny that the tech industry is under-regulated.

But he’s also proven that tech companies are woefully poor at self-policing. So if platforms won’t hold themselves accountable, it’s time for outside intervention — especially when our kids are involved.

WRITTEN BY

Sean Herman

Founder and CEO of Kinzoo. We aspire to be the most trusted brand for incorporating technology into our children’s lives. Proud husband and father of two.
